Kosh Suite - API Framework for Lexical Data

The Kosh Suite is an API-centric, open-source framework designed to efficiently manage and access lexical data.

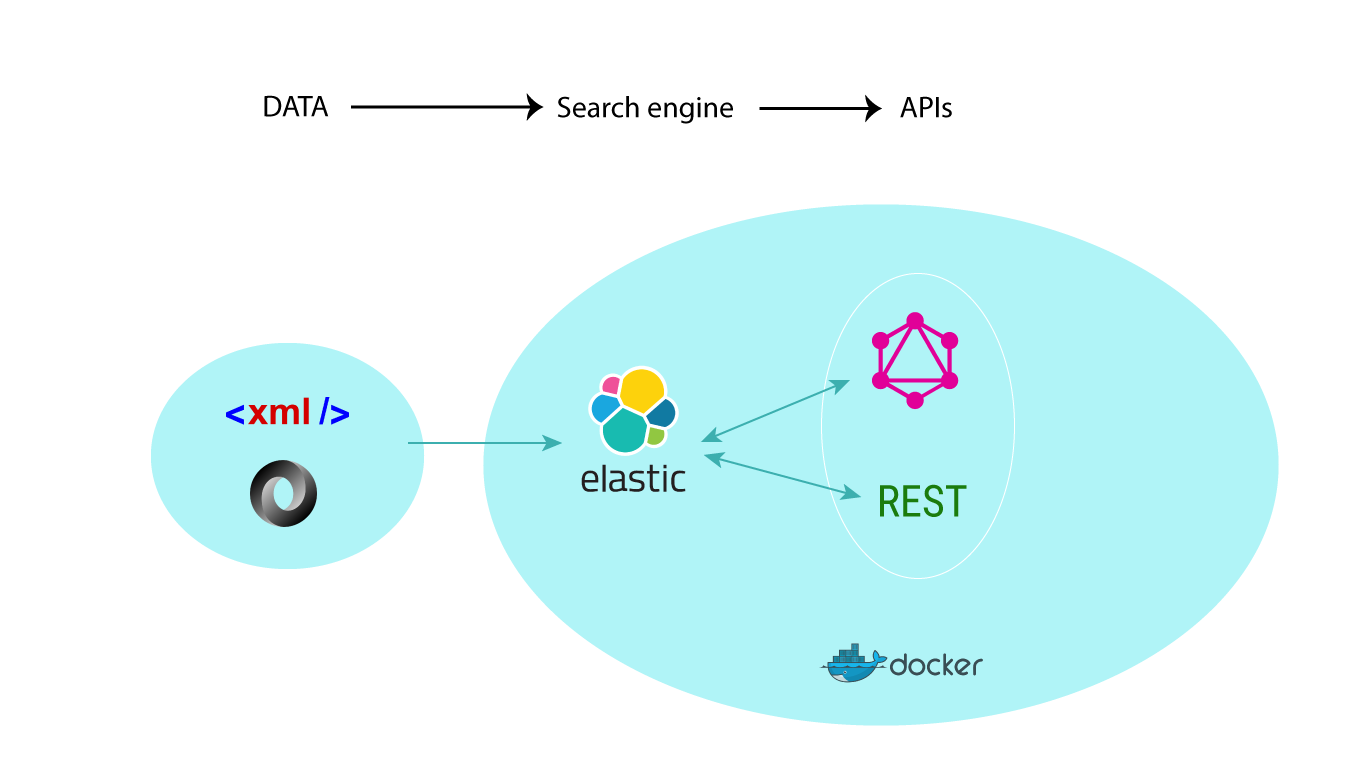

The Kosh Suite is a comprehensive framework for working with lexical data. Its backend, Kosh, is designed to provide API access to any XML-encoded lexical dataset, regardless of the data model the dataset uses.

The name Kosh derives from the Hindi word for dictionary or lexicon, कोश (koś or kosh), which in turn derives from the Sanskrit कोश (kośa), with the same meaning.

Features

- Kosh processes lexical data in XML format.

- Two APIs, based on GraphQL and REST, provide access to an Elasticsearch backend.

- All components of the Kosh Suite can be deployed via Docker. Kosh also runs natively on Unix-like systems.
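To show how a client might talk to the two APIs, here is a minimal Python sketch that only builds the HTTP requests, using the standard library. It assumes a Kosh instance at the hypothetical base URL `http://localhost:5000/api/my_dict`. The endpoint paths (`/restful/entries`, `/graphql`), the query parameters (`field`, `query`, `query_type`), and the GraphQL field names are illustrative assumptions, not a documented schema, so check the API documentation of your own deployment before relying on them.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical base URL of a Kosh instance serving one dictionary.
BASE_URL = "http://localhost:5000/api/my_dict"


def rest_search_url(base_url: str, field: str, query: str, query_type: str = "term") -> str:
    """Build a GET URL for an assumed REST search endpoint."""
    # Path and parameter names are assumptions, not a documented Kosh API.
    params = urllib.parse.urlencode({"field": field, "query": query, "query_type": query_type})
    return f"{base_url}/restful/entries?{params}"


# Assumed GraphQL query; the `entries` field and its arguments are illustrative.
SEARCH_QUERY = """
query Search($field: String!, $query: String!) {
  entries(field: $field, query: $query) { id xml }
}
"""


def graphql_request(base_url: str, field: str, query: str) -> urllib.request.Request:
    """Build a POST request carrying a GraphQL query and its variables as JSON."""
    body = json.dumps({"query": SEARCH_QUERY, "variables": {"field": field, "query": query}})
    return urllib.request.Request(
        f"{base_url}/graphql",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Print the requests instead of sending them, so no server is needed.
    print(rest_search_url(BASE_URL, "lemma", "kosh"))
    req = graphql_request(BASE_URL, "lemma", "kosh")
    print(req.get_method(), req.full_url)
```

To actually send a request, pass the URL or the `Request` object to `urllib.request.urlopen` and decode the response body with `json.load`. Keeping request construction separate from sending makes the sketch easy to test without a running server.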

Setup

You can configure Kosh to create APIs for any XML-encoded lexical resource. The deployment section explains how to do this for your own data.
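Because Kosh is meant to work independently of the data model, a configuration for a new resource has to express, in some form, which elements count as entries and which parts of each entry to expose. The following Python sketch illustrates that idea with the standard library: a hypothetical mapping from field names to path expressions is applied to each entry, producing flat records of the kind a search engine such as Elasticsearch can index. This is not Kosh's actual configuration format; `ENTRY_PATH`, `FIELDS`, `extract_documents`, and the sample XML are illustrative assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample data: a small TEI-like dictionary with two entries.
SAMPLE_XML = """<dictionary>
  <entry id="e1"><form><orth>kosh</orth></form><sense><def>dictionary</def></sense></entry>
  <entry id="e2"><form><orth>shabd</orth></form><sense><def>word</def></sense><sense><def>sound</def></sense></entry>
</dictionary>"""

# Hypothetical mapping: where entries live, and index field name -> path relative to an entry.
# ElementTree supports only a subset of XPath; lxml offers full XPath 1.0.
ENTRY_PATH = ".//entry"
FIELDS = {
    "lemma": "./form/orth",
    "definition": "./sense/def",
}


def extract_documents(xml_text: str, entry_path: str, fields: dict[str, str]) -> list[dict]:
    """Turn every matching entry into a flat dict ready for a search index."""
    root = ET.fromstring(xml_text)
    documents = []
    for entry in root.findall(entry_path):
        doc = {"id": entry.get("id")}
        for name, path in fields.items():
            # Collect the text of all matching elements; a field may repeat.
            doc[name] = [el.text.strip() for el in entry.findall(path) if el.text]
        documents.append(doc)
    return documents


if __name__ == "__main__":
    for doc in extract_documents(SAMPLE_XML, ENTRY_PATH, FIELDS):
        print(doc)
```

Supporting a different schema only means changing `ENTRY_PATH` and `FIELDS`; the extraction code stays the same. That separation between data model and processing code is the design choice this sketch is meant to illustrate.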

References

Francisco Mondaca, Philip Schildkamp, and Felix Rau. 2019. “Introducing Kosh, a Framework for Creating and Maintaining APIs for Lexical Data.” In Electronic Lexicography in the 21st Century. Proceedings of the eLex 2019 Conference, Sintra, Portugal. Brno: Lexical Computing CZ, s.r.o., 907–21.

Francisco Mondaca and Jan Bigalke. 2019. “Introducing an Open, Dynamic and Efficient Access for TEI-encoded Dictionaries on the Internet.” Presentation of a Kosh-based workflow for editing dictionaries and publishing them via APIs. TEI Conference 2019, Graz, Austria.

Francisco Mondaca, Felix Rau, Claes Neuefeind, Börge Kiss, Daniel Kölligan, Uta Reinöhl, and Patrick Sahle. 2019. “C-SALT APIs – Connecting and Exposing Heterogeneous Language Resources.” Presentation at the Digital Humanities Conference 2019, Utrecht, The Netherlands.

Contact

If you have any questions, please email us at info-kosh[at]uni-koeln.de.